
The cosmic curvature $ \Omega_{K,0} $ is an important parameter that is related to many fundamental problems in modern cosmology. Knowing whether the universe is spatially open ($ \Omega_{K,0}>0 $), flat ($ \Omega_{K,0}=0 $), or closed ($ \Omega_{K,0}<0 $) is crucial for understanding its evolution and the properties of dark energy. A flat universe is strongly favored by several cosmological observations. For instance, the Planck 2018 cosmic microwave background (CMB) observations combined with baryon acoustic oscillation (BAO) measurements give $ \Omega_{K,0}=0.0007\pm0.0019 $, indicating that the universe is flat to a $ 1\sigma $ precision of about $ 2\times 10^{-3} $ [1]. However, the Planck TT, TE, EE+lowE power spectra alone favor a slightly closed universe, $ \Omega_{K,0}=-0.044_{-0.015}^{+0.018} $ [1–4]. This deviation from flatness has been interpreted as undetected systematics, a statistical fluctuation, or new physics beyond the Λ cold dark matter (ΛCDM) model. Efstathiou and Gratton [5] recently revisited the issue and argued that the Planck data remain consistent with a flat universe. Whether this crisis really exists is still under debate.

It should be pointed out that most curvature estimates assume a specific cosmological model. However, there is a strong degeneracy between the curvature parameter and the dark energy equation of state $ w(z) $. This makes it difficult to constrain the two simultaneously and hinders our understanding of dark energy. It is therefore necessary to measure the cosmic curvature in a cosmological model-independent way. For this purpose, many novel and feasible methods have been proposed; see, e.g., Refs. [6–36].

In Ref. [37], Cai et al. proposed a model-independent method to test whether the cosmic curvature deviates from zero. They used observational data to reconstruct the reduced Hubble parameter $ E(z) $ and the distance-redshift relation $ [D(z), D'(z)] $, and then combined the reconstructions to perform a null test of $ \Omega_{K,0} $. Their analysis used $ H(z) $ measurements from cosmic chronometers (CC) and BAO observations together with the Union2.1 type Ia supernova (SNe Ia) sample, and the result favors a flat universe. With the growth of observational data, Yang and Gong [38] repeated the null test using the CC $ H(z) $ data and the SNe Ia Pantheon compilation, and the result is still consistent with a flat universe. The BAO $ H(z) $ data were not considered in Ref. [38]. Note that the CC data may not constitute a reliable source of information owing to some concerns [39]. In contrast, the $ H(z) $ data from BAO observations are far more accurate and reliable. In the present work, we consider both the CC and BAO $ H(z) $ measurements; that is, we use the latest CC+BAO $ H(z) $ data and the SNe Ia Pantheon sample to test the spatial flatness of the universe.

Furthermore, we explore the role that future gravitational wave (GW) standard sirens and CC+BAO+redshift drift (RD) observations will play in the null test of $ \Omega_{K,0} $. GWs can serve as standard sirens because the GW waveform carries information on the luminosity distance $ D_L $ to the source [40–42]. If the source's redshift can be determined, for example, by identifying the electromagnetic counterpart of the GW event, we can establish the $ D_L $-redshift relation. We simulate the GW $ D_L(z) $ data based on the planned space-based GW detector, the DECi-hertz Interferometer Gravitational wave Observatory (DECIGO) [43]. In the coming decades, powerful optical and radio telescopes will allow better measurements of the Hubble parameter with the CC and BAO methods. These include Euclid [44], the Subaru Prime Focus Spectrograph (PFS) [45], the Dark Energy Spectroscopic Instrument (DESI) [46,47], and the Square Kilometre Array (SKA) [48]. We simulate the CC+BAO $ H(z) $ data in the redshift interval $ 0<z<2.5 $ based on the current observational data. Another promising way to measure $ H(z) $ in the future is the RD method [49]. We simulate high-$ z $ Hubble data based on hypothetical RD observations with the upcoming European Extremely Large Telescope (E-ELT). We then use the simulated GW and CC+BAO+RD data to perform the null test of $ \Omega_{K,0} $ and compare the result with that obtained using the current CC+BAO and SNe Ia data.

In this work, we adopt a machine learning method, Gaussian process (GP) regression, to reconstruct the cosmological functions. It has been widely used in cosmological research; see, e.g., Refs. [37, 50–71]. The GP method reconstructs a function and its derivative from data without assuming any particular parametrization, which makes it suitable for our purpose. Artificial neural networks (ANNs) have recently emerged as a promising tool for reconstructing functions [72–75]. However, as far as we know, they have difficulty reconstructing the derivative of a function. For this reason, our analysis is based on GP.

The remainder of this paper is organized as follows. We briefly describe the methodology in Sec. II. Sec. III presents the data we adopted. We present the results and discussions in Sec. IV. Finally, we give our conclusions in Sec. V.


In a homogeneous and isotropic universe, the spacetime is described by the Friedmann–Lemaître–Robertson–Walker (FLRW) metric:

$$ {\rm d} s^{2}=-c^2 {\rm d} t^{2}+a^{2}(t)\left[\frac{{\rm d} r^{2}}{1-K r^{2}}+r^{2}\left({\rm d} \theta^{2}+\sin ^{2} \theta\, {\rm d} \phi^{2}\right)\right], \tag{1} $$

where $ c $ is the speed of light, $ a $ is the scale factor, and $ K $ is a constant related to the cosmic curvature by $ \Omega_{K} \equiv -Kc^2 / (aH)^2 $, with $ H $ being the Hubble parameter. We use $ \Omega_{K,0} $ to denote the present value of $ \Omega_{K} $. Then $ \Omega_{K,0}>0 $, $ \Omega_{K,0}=0 $, and $ \Omega_{K,0}<0 $ correspond to an open, flat, and closed universe, respectively. The luminosity distance can be expressed as

$$ D_{L}(z)=\frac{c(1+z)}{H_{0}\sqrt{\left|\Omega_{K,0}\right|}} \operatorname{sinn}\left[\sqrt{\left|\Omega_{K,0}\right|} \int_{0}^{z} \frac{{\rm d} z^{\prime}}{E\left(z^{\prime}\right)}\right], \tag{2} $$

where

$$ \operatorname{sinn}(x)=\begin{cases} \sinh (x), & \Omega_{K,0}>0, \\ x, & \Omega_{K,0}=0, \\ \sin (x), & \Omega_{K,0}<0, \end{cases} \tag{3} $$

and $ E(z)\equiv H(z)/H_0 $ is the reduced Hubble parameter.

By differentiating Eq. (2), we obtain [76, 77]

$$ \Omega_{K,0}=\frac{E^{2}(z) D^{\prime 2}(z)-1}{D^{2}(z)}, \tag{4} $$

where $ D(z)=(H_0/c)D_{L}(z)(1+z)^{-1} $ is the dimensionless comoving distance and the prime denotes a derivative with respect to $ z $. The curvature parameter can therefore be determined directly from the Hubble parameter and the luminosity distance through Eq. (4), which allows a null test of $ \Omega_{K,0} $. Note that $ D(0)=0 $ introduces a singularity in Eq. (4) at $ z=0 $. To avoid it, we multiply Eq. (4) by $ D^2(z) $ and factorize $ E^2D'^2-1=(ED'-1)(ED'+1) $, which gives

$$ \frac{\Omega_{K,0} D^{2}(z)}{E(z) D^{\prime}(z)+1}=E(z) D^{\prime}(z)-1. \tag{5} $$

The left-hand side of Eq. (5) is nonzero for $ z \neq 0 $ if $ \Omega_{K,0} $ is nonvanishing. Therefore, the null test of $ \Omega_{K,0} $ is equivalent to the null test of the left-hand side of Eq. (5). Following Cai et al. [37], we define

$$ \mathcal{O}_{K}(z) \equiv \frac{\Omega_{K,0} D^{2}(z)}{E(z) D^{\prime}(z)+1}. \tag{6} $$

For a spatially flat universe,

$$ \mathcal{O}_{K}(z)=E(z) D^{\prime}(z)-1=0 \tag{7} $$

holds at any redshift. Thus, any deviation from it implies a nonvanishing cosmic curvature. To carry out the null test of Eq. (7), we need to reconstruct $ E(z) $, $ D(z) $, and $ D'(z) $ from the observational data. In this work, we adopt the GP method to reconstruct these functions without assuming a specific cosmological model. The resulting test of whether $ \Omega_{K,0} $ deviates from zero is cosmological model-independent in the sense that no specific dark energy model is assumed. An FLRW description of a homogeneous and isotropic universe is, of course, still adopted.

GP is a nonparametric smoothing method for reconstructing functions [37, 50–71]. It assumes that, at each point $ z $, the value of the reconstructed function $ f(z) $ follows a Gaussian distribution, and that the values at different points are related by a covariance function. We can thus model a dataset with a GP as

$$ f(z) \sim \mathcal{GP} (\mu(z), k(z, \tilde{z})), \tag{8} $$

where $ \mu(z) $ is the mean function, which gives the mean of the random variable at each point. The covariance function $ k(z, \tilde{z}) $ correlates the values of $ f(z) $ at points $ z $ and $ \tilde{z} $ separated by a distance $ |z-\tilde{z}| $.

There is a wide range of possible covariance functions, and the choice of covariance function affects the reconstruction to some extent [52]. Here, we consider the commonly used squared exponential covariance function:

$$ k(z, \tilde{z})=\sigma_{f}^{2} \exp \left[-\frac{(z-\tilde{z})^{2}}{2 \ell^{2}}\right], \tag{9} $$

where $ \sigma_{f} $ sets the overall amplitude of the fluctuations around the mean and $ \ell $ sets the correlation length between the GP nodes. Both $ \sigma_{f} $ and $ \ell $ are hyperparameters, which are optimized on the observational data. Given the GP for $ f(z) $, the GP for its first derivative is

$$ f^{\prime}(z) \sim \mathcal{GP}\left(\mu^{\prime}(z), \frac{\partial^{2} k(z, \tilde{z})}{\partial z\, \partial \tilde{z}}\right). \tag{10} $$

Therefore, the derivative of the reconstructed function is straightforward to compute. In this work, we use the publicly available ${\tt GaPP}$ code to implement our analysis [52].

The ${\tt GaPP}$ calculation proceeds as follows. For a set of input points $ \boldsymbol{Z}=\left\{z_{i}\right\} $, the covariance matrix $ K(\boldsymbol{Z}, \boldsymbol{Z}) $ is given by $ [K(\boldsymbol{Z}, \boldsymbol{Z})]_{i j}=k\left(z_{i}, z_{j}\right) $. Even without observations, we can draw a random function $ f(z) $ from the GP. That is, we can generate a vector $ \boldsymbol{f}^{*} $ of function values at $ \boldsymbol Z^* = \left\{z_{i}^*\right\} $, with $ f_{i}^{*}=f\left(z_{i}^{*}\right) $:

$$ \boldsymbol{f}^{*} \sim \mathcal{GP}\left(\boldsymbol{\mu}^{*}, K\left(\boldsymbol{Z}^{*}, \boldsymbol{Z}^{*}\right)\right), \tag{11} $$

where $ \boldsymbol{\mu}^{*} $ is the prior mean of $ \boldsymbol{f}^{*} $, which is set to zero in this paper. Observations $ \left\{(z_{i}, y_{i}, \sigma_i)\,|\,i=1, 2, \ldots, N\right\} $ can also be described by a GP. We stress that $ y_{i} $ is assumed to scatter around the underlying function, $ y_i = f(z_i)+\epsilon_{i} $, where $ \epsilon_{i} $ is Gaussian noise with variance $ \sigma_i^2 $. Therefore, the data covariance must be added to the covariance matrix:

$$ \boldsymbol{y} \sim \mathcal{GP}(\boldsymbol{\mu}, K(\boldsymbol{Z}, \boldsymbol{Z})+C), \tag{12} $$

where $ \boldsymbol{y} $ is the vector of $ y_i $ values and $ C $ is the covariance matrix of the data. For uncorrelated data, $ C= \text{diag}(\sigma_{i}^{2}) $.

The two GPs for $ \boldsymbol{f}^{*} $ and $ \boldsymbol{y} $ can be combined into the joint distribution

$$ \left[\begin{array}{c} \boldsymbol{y} \\ \boldsymbol{f}^{*} \end{array}\right] \sim \mathcal{GP}\left(\left[\begin{array}{c} \boldsymbol{\mu} \\ \boldsymbol{\mu}^{*} \end{array}\right],\left[\begin{array}{cc} K(\boldsymbol{Z}, \boldsymbol{Z})+C & K\left(\boldsymbol{Z}, \boldsymbol{Z}^{*}\right) \\ K\left(\boldsymbol{Z}^{*}, \boldsymbol{Z}\right) & K\left(\boldsymbol{Z}^{*}, \boldsymbol{Z}^{*}\right) \end{array}\right]\right). \tag{13} $$

Here $ \boldsymbol{y} $ is known from observations. To reconstruct $ \boldsymbol{f}^{*} $, we consider the conditional distribution

$$ \boldsymbol{f}^{*} \mid \boldsymbol{Z}^{*}, \boldsymbol{Z}, \boldsymbol{y} \sim \mathcal{GP}\left(\overline{\boldsymbol{f}^{*}}, \operatorname{cov}\left(\boldsymbol{f}^{*}\right)\right), \tag{14} $$

where

$$ \overline{\boldsymbol{f}^{*}}=\boldsymbol{\mu}^{*}+K\left(\boldsymbol{Z}^{*}, \boldsymbol{Z}\right)[K(\boldsymbol{Z}, \boldsymbol{Z})+C]^{-1}(\boldsymbol{y}-\boldsymbol{\mu}) \tag{15} $$

and

$$ \begin{aligned} \operatorname{cov}\left(\boldsymbol{f}^{*}\right)=& K\left(\boldsymbol{Z}^{*}, \boldsymbol{Z}^{*}\right) \\ &-K\left(\boldsymbol{Z}^{*}, \boldsymbol{Z}\right)[K(\boldsymbol{Z}, \boldsymbol{Z})+C]^{-1} K\left(\boldsymbol{Z}, \boldsymbol{Z}^{*}\right) \end{aligned} \tag{16} $$

are the mean and covariance of $ \boldsymbol{f}^{*} $, respectively. Eq. (14) is the posterior distribution that follows from the prior (11) and the data model (12). To reconstruct $ \boldsymbol{f}^{*} $ with the above equations, we need the hyperparameters $ \sigma_{f} $ and $ \ell $. These can be determined by maximizing the log marginal likelihood

$$ \begin{aligned} \ln \mathcal{L}= \ln p\left(\boldsymbol{y} \mid \boldsymbol{Z}, \sigma_{f}, \ell\right) =&-\frac{1}{2}(\boldsymbol{y}-\boldsymbol{\mu})^{T}[K(\boldsymbol{Z}, \boldsymbol{Z})+C]^{-1}(\boldsymbol{y}-\boldsymbol{\mu}) \\ &-\frac{1}{2} \ln |K(\boldsymbol{Z}, \boldsymbol{Z})+C|-\frac{N}{2} \ln 2 \pi. \end{aligned} \tag{17} $$

Once optimized through Eq. (17), the hyperparameters are held fixed. The reconstructed function $ \boldsymbol{f}^{*} $ at the chosen points $ \boldsymbol{Z}^{*} $ is then computed from Eqs. (15) and (16). Therefore, the GP reconstruction is model-independent and involves no free parameters of a cosmological model.
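As a rough illustration of Eqs. (9), (10), and (15)–(17), the following NumPy/SciPy sketch implements a zero-mean GP with the squared exponential kernel. It optimizes $ \sigma_f $ and $ \ell $ by maximizing Eq. (17), then returns the posterior mean and $ 1\sigma $ error of both $ f $ and $ f' $. This is not the ${\tt GaPP}$ code used in this work. The function names, the Nelder–Mead optimizer, and the toy data are our own assumptions.

```python
import numpy as np
from scipy.linalg import cho_factor, cho_solve
from scipy.optimize import minimize


def k_se(z1, z2, sigma_f, ell):
    """Squared exponential covariance, Eq. (9), for every pair (z1[i], z2[j])."""
    d = z1[:, None] - z2[None, :]
    return sigma_f**2 * np.exp(-d**2 / (2.0 * ell**2))


def dk_dz1(z1, z2, sigma_f, ell):
    """Cov[f'(z1[i]), f(z2[j])]: derivative of Eq. (9) w.r.t. its first argument."""
    d = z1[:, None] - z2[None, :]
    return -k_se(z1, z2, sigma_f, ell) * d / ell**2


def d2k_dz1dz2(z1, z2, sigma_f, ell):
    """Cov[f'(z1[i]), f'(z2[j])]: the mixed second derivative appearing in Eq. (10)."""
    d = z1[:, None] - z2[None, :]
    return k_se(z1, z2, sigma_f, ell) * (1.0 / ell**2 - d**2 / ell**4)


def log_marginal_likelihood(log_theta, z, y, C):
    """Eq. (17) with zero prior mean; log_theta = (log sigma_f, log ell)."""
    sigma_f, ell = np.exp(np.clip(log_theta, -10.0, 10.0))
    try:
        cf = cho_factor(k_se(z, z, sigma_f, ell) + C, lower=True)
    except np.linalg.LinAlgError:
        return -1e25  # numerically not positive definite: reject this point
    alpha = cho_solve(cf, y)
    log_det = 2.0 * np.sum(np.log(np.diag(cf[0])))
    return -0.5 * y @ alpha - 0.5 * log_det - 0.5 * len(y) * np.log(2.0 * np.pi)


def gp_reconstruct(z, y, C, zstar):
    """Posterior mean and 1-sigma error of f and f' at zstar, Eqs. (15)-(16)."""
    x0 = np.log([np.std(y), z.max() - z.min()])
    res = minimize(lambda t: -log_marginal_likelihood(t, z, y, C), x0,
                   method="Nelder-Mead")
    sigma_f, ell = np.exp(np.clip(res.x, -10.0, 10.0))

    cf = cho_factor(k_se(z, z, sigma_f, ell) + C, lower=True)
    alpha = cho_solve(cf, y)                    # [K(Z,Z)+C]^{-1} y
    Ks = k_se(zstar, z, sigma_f, ell)           # K(Z*, Z)
    Kds = dk_dz1(zstar, z, sigma_f, ell)        # Cov[f'(Z*), y]

    def err(cov):
        return np.sqrt(np.clip(np.diag(cov), 0.0, None))

    mean_f = Ks @ alpha
    cov_f = k_se(zstar, zstar, sigma_f, ell) - Ks @ cho_solve(cf, Ks.T)
    mean_df = Kds @ alpha
    cov_df = d2k_dz1dz2(zstar, zstar, sigma_f, ell) - Kds @ cho_solve(cf, Kds.T)
    return mean_f, err(cov_f), mean_df, err(cov_df)


# Toy usage: noisy samples of f(z) = 1 + 0.5 z + 0.3 z^2 (illustrative only)
rng = np.random.default_rng(42)
z_obs = np.linspace(0.0, 2.0, 40)
sig = np.full_like(z_obs, 0.01)
y_obs = 1.0 + 0.5 * z_obs + 0.3 * z_obs**2 + rng.normal(0.0, sig)
z_rec = np.linspace(0.2, 1.8, 9)
f_mean, f_err, df_mean, df_err = gp_reconstruct(z_obs, y_obs, np.diag(sig**2), z_rec)
```

For correlated data, such as the BAO $ H(z) $ points, the full data covariance matrix would be passed as `C` instead of a diagonal matrix.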

Here we present the data used for the reconstructions. We do not use CMB data, for three reasons: (i) we do not use observations to constrain a specific model; (ii) the reconstructions cannot be extended to the early universe (e.g., the last-scattering surface); and (iii) the inconsistencies between early- and late-universe measurements (such as the well-known "Hubble tension") would also have to be taken into account. Thus, we only use late-universe observations in this work. Subsections III A and III B present the CC+BAO and SNe Ia real data, and Subsections III C and III D present the GW and CC+BAO+RD mock data.


The Hubble parameter $ H(z) $, which describes the expansion rate of the universe, can be measured in two main ways. One method is to compute the differential ages of passively evolving galaxies (usually called cosmic chronometers), which provides model-independent $ H(z) $ measurements [100, 101]. In the framework of general relativity, the Hubble parameter can be written in terms of the differential age evolution of the universe $ \Delta t $ over a given redshift interval $ \Delta z $:

$$ H(z)=-\frac{1}{1+z} \frac{\Delta z}{\Delta t}. \tag{18} $$

The redshifts and age differences of the cosmic chronometers therefore yield an estimate of $ H(z) $. The CC method does not assume any fiducial cosmological model. We summarize the 32 CC $ H(z) $ measurements and their sources in Table 1. It should be pointed out that the CC data may not constitute a reliable source of information, considering the concerns raised in Ref. [39].

| Redshift $ z $ | $ H(z) $ | $ \sigma_{H(z)} $ | Reference |
|---|---|---|---|
| 0.07 | 69 | 19.6 | [78] |
| 0.09 | 69 | 12 | [79] |
| 0.12 | 68.6 | 26.2 | [78] |
| 0.17 | 83 | 8 | [79] |
| 0.179 | 75 | 4 | [80] |
| 0.199 | 75 | 5 | [80] |
| 0.2 | 72.9 | 29.6 | [78] |
| 0.27 | 77 | 14 | [79] |
| 0.28 | 88.8 | 36.6 | [78] |
| 0.352 | 83 | 14 | [80] |
| 0.38 | 83 | 13.5 | [81] |
| 0.4 | 95 | 17 | [79] |
| 0.4004 | 77 | 10.2 | [81] |
| 0.425 | 87.1 | 11.2 | [81] |
| 0.445 | 92.8 | 12.9 | [81] |
| 0.47 | 89 | 49.6 | [82] |
| 0.4783 | 80.9 | 9 | [81] |
| 0.48 | 97 | 62 | [83] |
| 0.593 | 104 | 13 | [80] |
| 0.68 | 92 | 8 | [80] |
| 0.75 | 98.8 | 33.6 | [84] |
| 0.781 | 105 | 12 | [80] |
| 0.875 | 125 | 17 | [80] |
| 0.88 | 90 | 40 | [83] |
| 0.9 | 117 | 23 | [79] |
| 1.037 | 154 | 20 | [80] |
| 1.3 | 168 | 17 | [79] |
| 1.363 | 160 | 33.6 | [85] |
| 1.43 | 177 | 18 | [79] |
| 1.53 | 140 | 14 | [79] |
| 1.75 | 202 | 40 | [79] |
| 1.965 | 186.5 | 50.4 | [85] |

Table 1. 32 $ H(z) $ measurements (in units of $ \rm km\,s^{-1}\,Mpc^{-1} $) obtained with the CC method.

Another method is to detect the radial BAO features using galaxy surveys and Ly-α forest measurements [86–99]. The BAO scale provides a standard ruler for measuring cosmological distances. Note that radial BAO measurements only constrain the combination $ H(z)r_{\rm d} $, where $ r_{\rm d} $ is the sound horizon

$$ r_{\mathrm{d}}=\int_{z_{\mathrm{d}}}^{\infty} \frac{c_{\mathrm{s}}(z)}{H(z)} \mathrm{d} z, \tag{19} $$

evaluated at the drag epoch $ z_{\rm d} $, with $ c_{\mathrm{s}} $ being the sound speed. To obtain $ H(z) $, one therefore first needs to fix the sound horizon. In this work, the fiducial value of $ r_{\rm d} $ is taken from the Planck 2018 CMB observations [1]. Table 2 compiles the 31 BAO $ H(z) $ data summarized in Refs. [66, 102]. The dataset includes almost all radial BAO data reported by various galaxy surveys. Some of the data points are correlated, either because they belong to the same analysis or because the galaxy samples overlap. In this paper, we consider the central values and standard deviations of the BAO $ H(z) $ data as well as the covariances among the data points, which are publicly available in the cited references. The BAO $ H(z) $ data are generally more precise than the CC $ H(z) $ data. Of course, the BAO measurements also face some challenges [103], especially the environmental dependence of the BAO peak location [104, 105]. We also note a noticeable systematic difference between the BAO and CC $ H(z) $ measurements [106, 107]. It would therefore be more reasonable to reconstruct $ E(z) $ from the CC and BAO $ H(z) $ data separately. However, to tighten the constraints on the cosmic curvature, we use the CC and BAO $ H(z) $ data together to reconstruct $ E(z) $.

| Redshift $ z $ | $ H(z) $ | $ \sigma_{H(z)} $ | Reference |
|---|---|---|---|
| 0.24 | 79.69 | 2.99 | [86] |
| 0.3 | 81.7 | 6.22 | [87] |
| 0.31 | 78.17 | 4.74 | [88] |
| 0.34 | 83.8 | 3.66 | [86] |
| 0.35 | 82.7 | 8.4 | [89] |
| 0.36 | 79.93 | 3.39 | [88] |
| 0.38 | 81.5 | 1.9 | [90] |
| 0.40 | 82.04 | 2.03 | [88] |
| 0.43 | 86.45 | 3.68 | [86] |
| 0.44 | 82.6 | 7.8 | [91] |
| 0.44 | 84.81 | 1.83 | [88] |
| 0.48 | 87.79 | 2.03 | [88] |
| 0.51 | 90.4 | 1.9 | [90] |
| 0.52 | 94.35 | 2.65 | [88] |
| 0.56 | 93.33 | 2.32 | [88] |
| 0.57 | 87.6 | 7.8 | [92] |
| 0.57 | 96.8 | 3.4 | [93] |
| 0.59 | 98.48 | 3.19 | [88] |
| 0.6 | 87.9 | 6.1 | [91] |
| 0.61 | 97.3 | 2.1 | [90] |
| 0.64 | 98.82 | 2.99 | [88] |
| 0.73 | 97.3 | 7 | [91] |
| 0.978 | 113.72 | 14.63 | [94] |
| 1.23 | 131.44 | 12.42 | [94] |
| 1.526 | 148.11 | 12.71 | [94] |
| 1.944 | 172.63 | 14.79 | [94] |
| 2.3 | 224 | 8 | [95] |
| 2.33 | 224 | 8 | [96] |
| 2.34 | 222 | 7 | [97] |
| 2.36 | 226 | 8 | [98] |
| 2.4 | 227.8 | 5.61 | [99] |

Table 2. 31 $ H(z) $ measurements (in units of $ \rm km\,s^{-1}\,Mpc^{-1} $) obtained with the BAO method.
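Eq. (18) and the conversion from $ H(z)r_{\rm d} $ to $ H(z) $ are mostly unit bookkeeping. The sketch below converts an age difference in Gyr into $ H(z) $ in $ \rm km\,s^{-1}\,Mpc^{-1} $. It also recovers $ H(z) $ and its error from a radial BAO measurement for a fixed $ r_{\rm d} $. The helper names are hypothetical, a Julian year is assumed, and the inputs in the usage lines are placeholders rather than measurements.

```python
MPC_KM = 3.0856775814913673e19          # 1 Mpc in km
GYR_S = 1e9 * 365.25 * 86400.0          # 1 Gyr (Julian years) in s
INV_GYR_IN_KMSMPC = MPC_KM / GYR_S      # 1/Gyr expressed in km/s/Mpc (about 977.8)


def hubble_cc(z, dz, dt_gyr):
    """Eq. (18): H(z) = -dz / [(1+z) dt], with the age difference dt in Gyr.

    dt is negative for positive dz, because galaxies at higher redshift are younger.
    """
    return -dz / ((1.0 + z) * dt_gyr) * INV_GYR_IN_KMSMPC


def hubble_bao(h_rd, sigma_h_rd, r_d):
    """Radial BAO: H(z) and its error from H(z) r_d [km/s] for a fixed r_d [Mpc]."""
    return h_rd / r_d, sigma_h_rd / r_d


# Placeholder inputs, for illustration only
h_cc = hubble_cc(z=0.5, dz=0.1, dt_gyr=-0.8)
h_bao, sig_bao = hubble_bao(h_rd=13000.0, sigma_h_rd=300.0, r_d=147.0)
```

Because $ r_{\rm d} $ is fixed to a fiducial value here, its own uncertainty is not propagated into $ \sigma_{H(z)} $ in this sketch.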

The data used to reconstruct $ D(z) $ and $ D'(z) $ come from the Pantheon compilation [13], which contains 1048 SNe Ia covering the redshift range $ 0.001 < z < 2.26 $. For an SN Ia, the distance modulus $ \mu $ and the luminosity distance are related by

$$ \mu(z)=5 \log_{10} \left[\frac{D_{L}(z)}{\mathrm{Mpc}}\right]+25, \tag{20} $$

and the observed distance modulus is

$$ \mu_{\mathrm{obs}}(z)=m_{B}(z)+\alpha \cdot X_{1}-\beta \cdot \mathcal{C}-M_{B}, \tag{21} $$

where $ m_{B} $ is the rest-frame B-band peak magnitude. $ X_{1} $ and $ \mathcal{C} $ are the light-curve stretch and the supernova color at maximum brightness, respectively, and $ M_{B} $ is the absolute B-band magnitude. $ \alpha $ and $ \beta $ are two nuisance parameters, which can be calibrated to zero with the ${\tt BEAMS}$ with Bias Corrections (BBC) method [108]. The observed distance modulus then reduces to

$$ \mu_{\mathrm{obs}}(z)=m_{B}(z) - M_{B}. \tag{22} $$

Once the absolute magnitude is known, the luminosity distances can be obtained.
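The step from Eqs. (20) and (22) to the normalized distance $ D(z)=(\hat H_0/c)D_L(z)/(1+z) $ can be sketched as follows. This is a minimal illustration under stated assumptions. The value of $ M_B $ is a hypothetical placeholder, and only diagonal magnitude errors are propagated, although the Pantheon sample also provides a systematic covariance matrix. The normalization $ \hat H_0=70\ \rm km\,s^{-1}\,Mpc^{-1} $ matches the one adopted in this work.

```python
import numpy as np

C_KM_S = 299792.458  # speed of light [km/s]


def dl_from_mu(mu):
    """Invert Eq. (20): luminosity distance in Mpc."""
    return 10.0 ** ((np.asarray(mu, dtype=float) - 25.0) / 5.0)


def normalized_distance(z, m_b, sigma_m, M_B, H0_hat=70.0):
    """Eq. (22) -> D_L -> D(z) = (H0_hat / c) D_L / (1 + z), with 1-sigma errors.

    Since D is proportional to 10**(mu / 5), dD/dmu = (ln 10 / 5) D.
    """
    mu = np.asarray(m_b, dtype=float) - M_B
    D = H0_hat / C_KM_S * dl_from_mu(mu) / (1.0 + np.asarray(z, dtype=float))
    sigma_D = np.log(10.0) / 5.0 * D * np.asarray(sigma_m, dtype=float)
    return D, sigma_D


# Placeholder supernova: M_B = -19.3 is a hypothetical value, not a fit result
D, sigma_D = normalized_distance(z=0.5, m_b=22.3, sigma_m=0.15, M_B=-19.3)
```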


We simulate the GW standard sirens based on the spaceborne DECIGO and assume that the GWs are from the binary neutron star (BNS) mergers. For the redshift distribution of BNSs, we employ the form [109–115]

$$ P(z)\propto \frac{4\pi D_{C}^2(z)R(z)}{H(z)(1+z)}, \tag{23} $$

where $ D_{C} $ is the comoving distance and $ R(z) $ describes the time evolution of the burst rate:

$$ R(z) = \begin{cases} 1+2z, & z\leq 1, \\ \dfrac{3}{4}(5-z), & 1 < z < 5,\\ 0, & z \geq 5. \end{cases} \tag{24} $$

Other strategies for modeling the redshift distribution of BNSs also exist [116–119]. We then calculate the fiducial luminosity distance in the Planck best-fit flat ΛCDM model as follows:

$$ D_{L}(z)=\frac{c(1+z)}{H_{0}} \int_{0}^{z} \frac{\mathrm{d} z'}{\sqrt{\Omega_{m}(1+z')^{3}+\Omega_{\Lambda}}}, \tag{25} $$

where $ H_0=67.3\ \rm km\ s^{-1}\ Mpc^{-1} $, $ \Omega_{m}=0.317 $, and $ \Omega_{\Lambda}=0.683 $. The total measurement error of $ D_L $ consists of the instrumental error, the weak lensing error, and the peculiar velocity error:

$$ \sigma_{D_{L}}=\sqrt{(\sigma_{D_L}^{\rm inst})^2 + (\sigma_{D_{L}}^{\rm lens})^2 + (\sigma_{D_{L}}^{\rm pv})^2}. \tag{26} $$

For the simulation of $ \sigma_{D_{L}}^{\rm inst} $, we refer the reader to Ref. [120]. For the weak lensing error, we adopt the form given in Ref. [121], and the error caused by the peculiar velocity of the GW source follows Ref. [122]. We also include Gaussian randomness. At each redshift $ z $, the simulated luminosity distance is drawn from the normal distribution $ \mathcal{N}\left(D_L(z)_{\mathrm{fid}}, \sigma_{D_L(z)}\right) $. DECIGO is expected to detect $ 10^5 $ GW events from BNSs at $ z\lesssim5 $ in one year of operation [43]. Only a fraction of these events will have electromagnetic counterparts that fix their redshifts. We therefore adopt a normal expected scenario of $ 5000 $ GW events with measured redshifts [36] as an example in this work. For a comprehensive analysis of redshift determination of GW events through optical follow-up observations, we refer the reader to Ref. [36]. For studies of GW standard sirens from coalescences of (super)massive black hole binaries, see, e.g., Refs. [123–129]. These include studies based on the space-based GW observatories LISA, Taiji, and TianQin, as well as on pulsar timing arrays.
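A minimal sketch of Eqs. (23)–(25) follows. It tabulates the fiducial $ D_L(z) $ of the Planck best-fit flat ΛCDM model quoted above and samples 5000 BNS redshifts from $ P(z) $ by inverse-transform sampling. It then scatters $ D_L $ around its fiducial value. The error model is deliberately simplified: a constant fractional error `frac_sigma` stands in for Eq. (26) as a hypothetical placeholder. The DECIGO instrumental, lensing, and peculiar-velocity terms of Refs. [120–122] are not reproduced here.

```python
import numpy as np
from scipy.integrate import cumulative_trapezoid

C_KM_S = 299792.458                   # speed of light [km/s]
H0, OM, OL = 67.3, 0.317, 0.683       # fiducial flat LCDM parameters quoted in the text


def E(z):
    """Reduced Hubble parameter of the fiducial flat LCDM model."""
    return np.sqrt(OM * (1 + z) ** 3 + OL)


def rate(z):
    """Burst-rate evolution R(z), Eq. (24)."""
    z = np.asarray(z, dtype=float)
    return np.where(z <= 1, 1 + 2 * z, np.where(z < 5, 0.75 * (5 - z), 0.0))


# Comoving and luminosity distances on a fine grid, Eq. (25), in Mpc
zgrid = np.linspace(0.0, 5.0, 5001)
DC = (C_KM_S / H0) * cumulative_trapezoid(1.0 / E(zgrid), zgrid, initial=0.0)
DL_fid = (1 + zgrid) * DC

# Redshift distribution of BNS events, Eq. (23); constant factors (4 pi, H0) drop out
pz = DC**2 * rate(zgrid) / (E(zgrid) * (1 + zgrid))
cdf = cumulative_trapezoid(pz, zgrid, initial=0.0)
cdf /= cdf[-1]


def simulate_sirens(n_events, frac_sigma, seed=0):
    """Draw redshifts from P(z), then D_L ~ N(D_L_fid, sigma) with a placeholder sigma."""
    rng = np.random.default_rng(seed)
    z = np.interp(rng.uniform(size=n_events), cdf, zgrid)  # inverse-CDF sampling
    dl = np.interp(z, zgrid, DL_fid)
    sigma = frac_sigma * dl  # hypothetical stand-in for Eq. (26)
    return z, rng.normal(dl, sigma), sigma


z_gw, dl_gw, sig_gw = simulate_sirens(5000, frac_sigma=0.02)
```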

In the future, powerful optical and radio telescopes will allow better measurements of the Hubble parameter with the CC and BAO methods. In addition, the neutral hydrogen (HI) intensity mapping technique will enable more efficient measurements of BAO signals [130–134]. The current dataset used in this work contains 63 $ H(z) $ measurements (32 CC and 31 BAO). We optimistically assume that 200 measurements at $ 0<z<2.5 $ will become available in the coming decades. Following Ma and Zhang [135], we assume that the redshifts of the CC+BAO $ H(z) $ data follow a Gamma distribution and that the error of $ H(z) $ increases linearly with redshift. We fit the real $ \sigma_{H(z)} $ data with a first-degree polynomial to obtain $ \sigma_0(z) $. We then choose two lines, $ \sigma_{+}(z) $ and $ \sigma_{-}(z) $, symmetrically around it so that most data points fall between them. The mock $ \sigma_{H(z)} $ values are drawn from the normal distribution $ \mathcal{N}\left(\sigma_0(z),~ \varepsilon(z)\right) $. Here $ \varepsilon(z)=[\sigma_{+}(z)-\sigma_{-}(z)]/4 $ is chosen so that $ \sigma_{H(z)} $ falls within the band between $ \sigma_{-}(z) $ and $ \sigma_{+}(z) $ with $ 2\sigma $ probability. The mean of $ H(z) $ is then sampled from $ \mathcal{N}\left(H(z)_{\mathrm{fid}}, \sigma_{H(z)}\right) $. For more details, we refer the reader to Ref. [135].

Now we turn to simulating the high-$ z $ RD $ H(z) $ data. Over an observation time interval $ \Delta t $, the shift in the spectroscopic velocity of a source, $ \Delta v $, can be expressed as [136]

$$ \Delta v=\frac{c\Delta z}{1+z}=cH_{0} \Delta t\left(1-\frac{E(z)}{1+z}\right). \tag{27} $$

Loeb [49] pointed out that high-resolution spectrographs on large telescopes could measure the shifts in the absorption-line spectra of distant quasi-stellar objects (QSOs). By observing the Ly-α absorption lines of QSOs, the E-ELT could measure the velocity shifts in the redshift range $ 2<z<5 $ [49, 136–143]. According to Liske et al. [136], the achievable precision of $ \Delta v $ can be estimated as

$$ \sigma_{\Delta v}=1.35\left(\frac{2370}{\rm S/N}\right)\left(\frac{N_{\mathrm{QSO}}}{30}\right)^{-1/2}\left(\frac{1+z_{\mathrm{QSO}}}{5}\right)^{q}\ \mathrm{cm\,s^{-1}}, \tag{28} $$

where $ \rm{S/N} $ is the signal-to-noise ratio of the Ly-α spectrum and $ N_{\mathrm{QSO}} $ is the number of observed QSOs at the effective redshift $ z_{\mathrm{QSO}} $. The exponent $ q $ is $ -1.7 $ up to $ z=4 $ and $ -0.9 $ for $ z>4 $. In this work, we assume five RD measurements at effective redshifts $ z=2.5 $, 3.0, 3.5, 4.0, and 4.5, with $ N_{\rm QSO} = 6 $ in each of the five redshift bins [144, 145], i.e., a total of 30 observed quasars. We further assume $ \Delta t=20 $ yr and $ \rm{S/N} = 3000 $ for the RD measurements. We simulate the RD $ H(z) $ data in the Planck best-fit flat ΛCDM model, and the error of $ H(z) $ is computed from the precision of $ \Delta v $.
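Eqs. (27) and (28) can be combined to obtain the $ H(z) $ error of the RD mock data. Solving Eq. (27) for the Hubble parameter gives $ H(z)=(1+z)\,[H_0-\Delta v/(c\,\Delta t)] $. Treating $ H_0 $ as exactly known, which is an assumption of this sketch, the error is then $ \sigma_{H}=(1+z)\,\sigma_{\Delta v}/(c\,\Delta t) $. The code evaluates this for the five assumed redshift bins with $ N_{\rm QSO}=6 $, $ \rm S/N=3000 $, and $ \Delta t=20 $ yr. The function names are hypothetical, and a Julian year is assumed.

```python
import numpy as np

C_CM_S = 2.99792458e10              # speed of light [cm/s]
YEAR_S = 365.25 * 86400.0           # Julian year [s]
MPC_KM = 3.0856775814913673e19      # 1 Mpc [km]


def sigma_dv(z_qso, sn=3000.0, n_qso=6):
    """Velocity-shift precision of Eq. (28), in cm/s."""
    z_qso = np.asarray(z_qso, dtype=float)
    q = np.where(z_qso <= 4.0, -1.7, -0.9)
    return 1.35 * (2370.0 / sn) * (n_qso / 30.0) ** -0.5 * ((1 + z_qso) / 5.0) ** q


def sigma_hubble_rd(z, dt_yr=20.0, **kw):
    """sigma_H = (1+z) sigma_dv / (c dt) from Eq. (27), returned in km/s/Mpc.

    H0 is treated as exactly known (an assumption of this sketch).
    """
    z = np.asarray(z, dtype=float)
    sig_inv_s = (1 + z) * sigma_dv(z, **kw) / (C_CM_S * dt_yr * YEAR_S)  # [1/s]
    return sig_inv_s * MPC_KM


z_rd = np.array([2.5, 3.0, 3.5, 4.0, 4.5])
sig_H_rd = sigma_hubble_rd(z_rd)
```

With these settings, $ \sigma_H $ decreases from $ z=2.5 $ to $ z=4 $ because $ (1+z)\,\sigma_{\Delta v}\propto(1+z)^{-0.7} $ there. The change of $ q $ above $ z=4 $ alters this scaling.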

We first need to normalize the observational Hubble parameter and luminosity distance data to obtain the

$ E(z) $ and$ D(z) $ data. From Eq. (7), we have$ \begin{align} \mathcal{O}_{K}(z)=\frac{H(z)}{\hat{H}_0} {\left[\frac{\hat{H}_0}{c(1+z)}D_{L}(z)\right]}^{\prime}-1, \end{align} $

(29) where

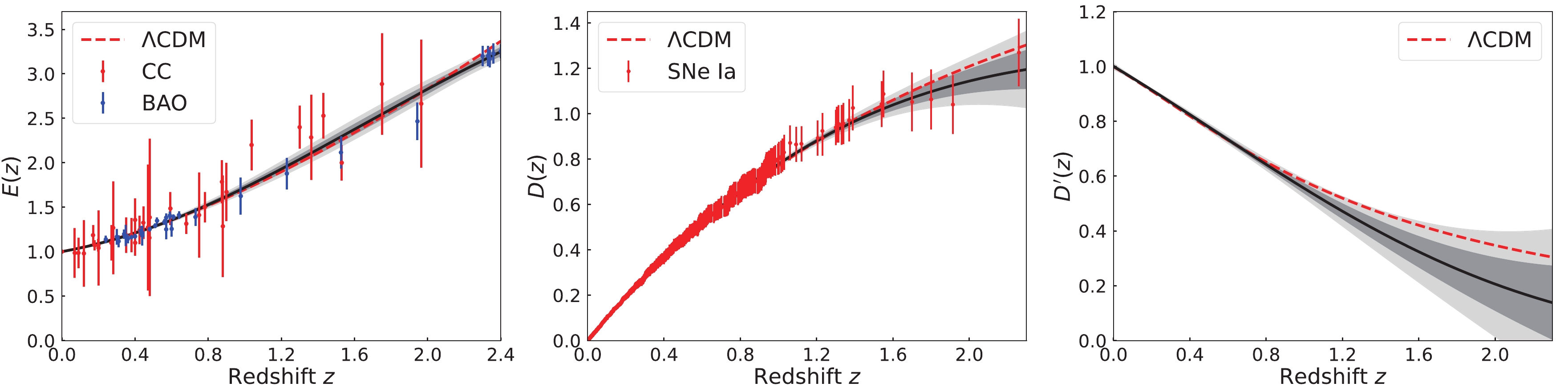

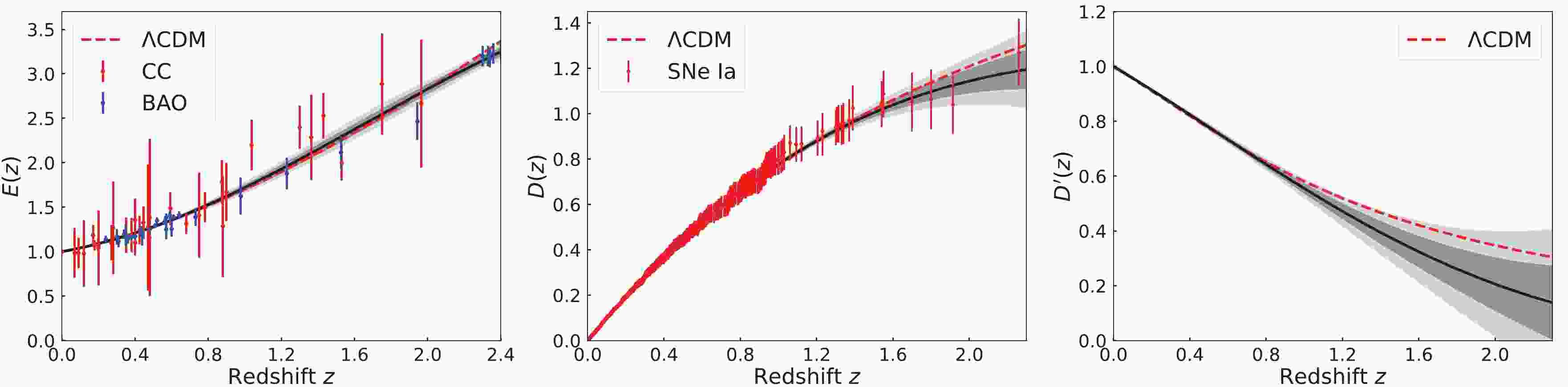

$ \hat{H}_0 $ is the normalization factor. Because the two factors can cancel each other out, their value will not influence the null test of the cosmic curvature. In this work, we adopt$ \hat{H}_0=70.0\ \rm km\ s^{-1}\ Mpc^{-1} $ to normalize the CC+BAO$ H(z) $ data as the observational$ E(z) $ . For consistency, we use the same$ \hat{H}_0 $ to normalize the SNe Ia$ D_L(z) $ data as the observational$ D(z) $ . We then adopt the GP method to reconstruct the functions of$ E(z) $ ,$ D(z) $ , and$ D^{\prime}(z) $ , and the results are shown in Fig. 1. The black line is the mean of the reconstruction, and the shaded grey regions are the$ 1\sigma $ ($ 68.3\% $ ) and$ 2\sigma $ ($ 95.4\% $ ) confidence levels (C.L.) of the reconstruction. One may find that the errors of the reconstructed function are significantly smaller than the errors of the data themselves. This is owing to the basic assumptions that the distribution of the function at each point is Gaussian and the data points are correlated by the covariance function. As can be seen, the error of$ E(z) $ does not increase significantly with redshift, owing to the relatively accurate BAO data. However, the error of$ D(z) $ and$ D^{\prime}(z) $ becomes very large at$ z>1.5 $ because the data in that region are scarce and of poor quality. In addition, all the reconstructed functions in the redshift interval$ z<1 $ are well consistent with a flat ΛCDM model with$\Omega_{ m}=0.28$ , which is adopted for comparison.
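To illustrate Eq. (29) and the cancellation of $ \hat H_0 $, the sketch below evaluates $ \mathcal{O}_K(z) $ for exact, noise-free ΛCDM functions built from Eq. (2). A central finite difference stands in for the GP derivative. For $ \Omega_{K,0}=0 $ it returns zero, and for $ \Omega_{K,0}\neq0 $ it satisfies $ E^2D'^2-1=\Omega_{K,0}D^2 $ as in Eq. (4). Changing $ \hat H_0 $ leaves the result unchanged. The model parameters ($ H_0=70 $, $ \Omega_m=0.28 $) and the function names are illustrative assumptions.

```python
import numpy as np
from scipy.integrate import quad

C_KM_S = 299792.458  # speed of light [km/s]


def hubble(z, H0=70.0, om=0.28, ok=0.0):
    """H(z) in km/s/Mpc for a LambdaCDM model with curvature Omega_K0 = ok."""
    ol = 1.0 - om - ok
    return H0 * np.sqrt(om * (1 + z) ** 3 + ok * (1 + z) ** 2 + ol)


def lum_dist(z, H0=70.0, om=0.28, ok=0.0):
    """Eq. (2): luminosity distance in Mpc, with sinn chosen by the sign of ok."""
    chi = quad(lambda x: H0 / hubble(x, H0, om, ok), 0.0, z,
               epsabs=1e-12, epsrel=1e-12)[0]  # integral of dz'/E(z')
    if ok > 0:
        s = np.sinh(np.sqrt(ok) * chi) / np.sqrt(ok)
    elif ok < 0:
        s = np.sin(np.sqrt(-ok) * chi) / np.sqrt(-ok)
    else:
        s = chi
    return C_KM_S / H0 * (1 + z) * s


def o_k(z, H0_hat, step=1e-4, **cosmo):
    """Eq. (29): (H / H0_hat) * d/dz[H0_hat D_L / (c (1+z))] - 1."""
    def D(x):
        return H0_hat / (C_KM_S * (1 + x)) * lum_dist(x, **cosmo)
    dD = (D(z + step) - D(z - step)) / (2 * step)
    return hubble(z, **cosmo) / H0_hat * dD - 1.0


flat = o_k(1.0, H0_hat=70.0)
closed_a = o_k(1.0, H0_hat=60.0, ok=-0.15)
closed_b = o_k(1.0, H0_hat=80.0, ok=-0.15)
```

The closed model gives a negative $ \mathcal{O}_K(z) $, matching the sign of $ \Omega_{K,0} $ in Eq. (6).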

Figure 1. (color online) Gaussian process reconstruction of

$ E(z) $ (left panel) from the CC+BAO data,$ D(z) $ (middle panel), and$ D'(z) $ (right panel) from the SNe Ia data. The grey shaded regions are the$ 1\sigma $ and$ 2\sigma $ C.L. of the reconstruction. The dots with error bars are the observational data. A flat ΛCDM model (red dashed lines) is also shown for comparison.With the GP reconstructions, we can carry out the null test of the cosmic curvature. In Ref. [37], the authors applied the Monte Carlo sampling to determine

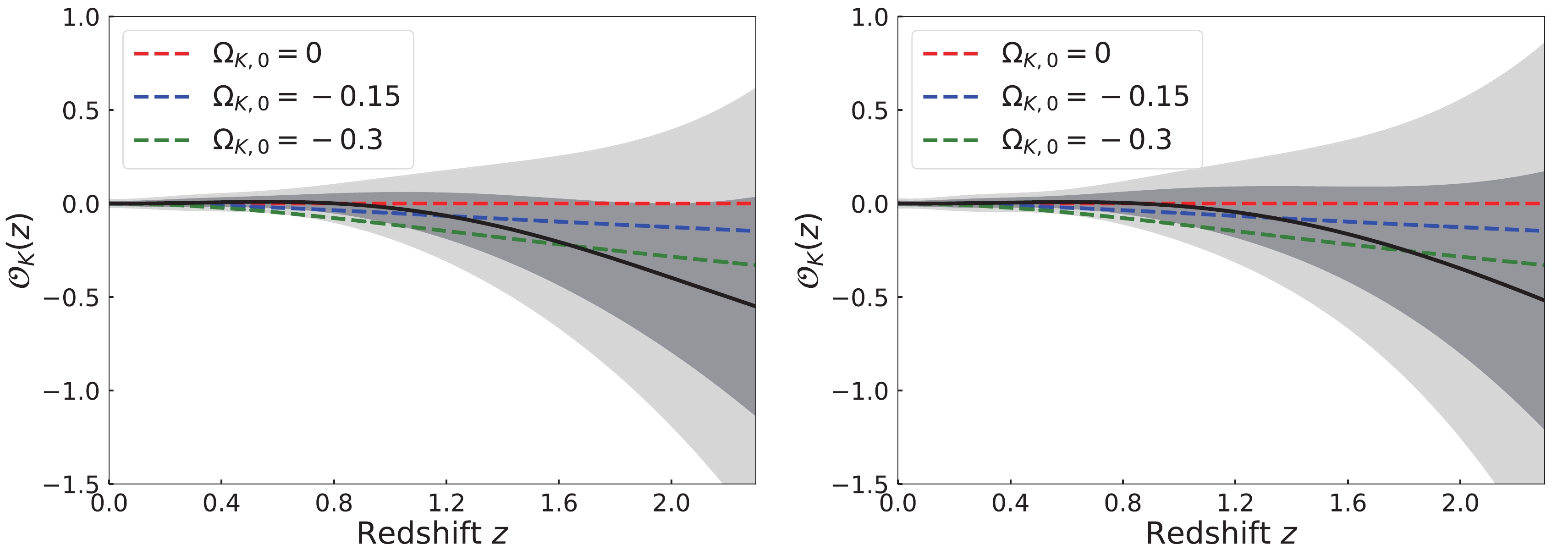

$ \mathcal{O}_{K}(z) $ . In contrast, we use the error propagation formula to calculate the error of$ \mathcal{O}_{K}(z) $ at each point z. The result is shown in the left panel of Fig. 2. The red dashed line refers to the spatially flat universe with$ \Omega_{K,0}=0 $ . It can be seen that the result is consistent with the flat universe within the domain$ 0<z<2.3 $ , falling within the$ 1\sigma $ C.L. We note that the mean of$ \mathcal{O}_{K}(z) $ is very close to zero in the redshift interval$ 0<z<1 $ ; however, it becomes increasingly negative at$ z>1 $ . In addition, the universe with$ \Omega_{K,0}=0 $ almost falls out of the$ 1\sigma $ C.L. at$ z>1.5 $ . This indicates that there is still a possibility for a spatially closed universe. Notably, the reconstruction at$ z>1.5 $ prefers a closed universe over an open one. We also plot the ΛCDM model with negative curvature in Fig. 2. As shown, a universe with$ \Omega_{K,0}= -0.15 $ can fall within the$ 1\sigma $ C.L., and a universe with$ \Omega_{K,0}=-0.3 $ can fall within the$ 2\sigma $ C.L. Note that the curves shown in Fig. 2 are plotted by assuming a ΛCDM model (with$\Omega_{ m}=0.28$ ), which is adopted here for comparison.
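The first-order error propagation for $ \mathcal{O}_K(z)=E(z)D'(z)-1 $ can be written compactly. The sketch below uses hypothetical names and assumes Gaussian GP errors. By default it treats $ E(z) $ and $ D'(z) $ as uncorrelated, which is reasonable here because they are reconstructed from independent datasets (CC+BAO versus SNe Ia). An optional cross-covariance term covers other cases. The numbers in the usage line are placeholders, not values read from Fig. 1.

```python
import numpy as np


def ok_with_error(E, sigma_E, dD, sigma_dD, cov_E_dD=0.0):
    """O_K = E D' - 1 (Eq. 7) and its 1-sigma error from linear propagation:
    var = (D' sigma_E)^2 + (E sigma_D')^2 + 2 E D' cov(E, D')."""
    E, sigma_E, dD, sigma_dD, cov = (np.asarray(a, dtype=float)
                                     for a in (E, sigma_E, dD, sigma_dD, cov_E_dD))
    ok = E * dD - 1.0
    var = (dD * sigma_E) ** 2 + (E * sigma_dD) ** 2 + 2.0 * E * dD * cov
    return ok, np.sqrt(var)


# Placeholder reconstruction values at a single redshift (illustrative only)
ok, sigma_ok = ok_with_error(E=1.5, sigma_E=0.03, dD=0.6, sigma_dD=0.02)
```

Because the inputs may be arrays, the same call evaluates the test on the whole reconstruction grid at once.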

Figure 2. (color online) Reconstruction of $ \mathcal{O}_{K}(z) $ from the CC+BAO and SNe Ia data, based on GP reconstructions with the squared exponential (left panel) and Matern92 (right panel) covariance functions. The grey shaded regions are the $ 1\sigma $ and $ 2\sigma $ C.L. of the reconstruction. The red dashed line corresponds to a flat universe with $ \Omega_{K,0}=0 $. The blue and green dashed lines correspond to ΛCDM models with $ \Omega_{K,0}=-0.15 $ and $ \Omega_{K,0}=-0.3 $, respectively.

In the GP analysis above, we only considered the squared exponential covariance function. However, the choice of covariance function affects the result. To illustrate this effect, we take the Matern92 covariance function as an example, which is given by

$ \begin{aligned}[b] k(z, \tilde{z})=& \sigma_{f}^{2} \exp \left(-\frac{3|z-\tilde{z}|}{\ell}\right)\left(1+\frac{3|z-\tilde{z}|}{\ell}+\frac{27(z-\tilde{z})^{2}}{7 \ell^{2}}\right.\\ &\left.+\frac{18|z-\tilde{z}|^{3}}{7 \ell^{3}}+\frac{27(z-\tilde{z})^{4}}{35 \ell^{4}}\right). \end{aligned} $

(30) Following the process described above, we perform the null test again, and the updated result is shown in the right panel of Fig. 2. The result is still consistent with the flat universe, falling within the

$ 1\sigma $ C.L. At$ z>1 $ , the mean of$ \mathcal{O}_{K}(z) $ also deviates from zero and becomes negative. The difference is that the error of$ \mathcal{O}_{K}(z) $ obtained using the Matern92 covariance function is slightly larger than that obtained using the squared exponential covariance function. In general, the difference does exist, but it is not significant enough to change our conclusions. In the following, we adopt only the GP with squared exponential covariance to complete our analysis.The large error of

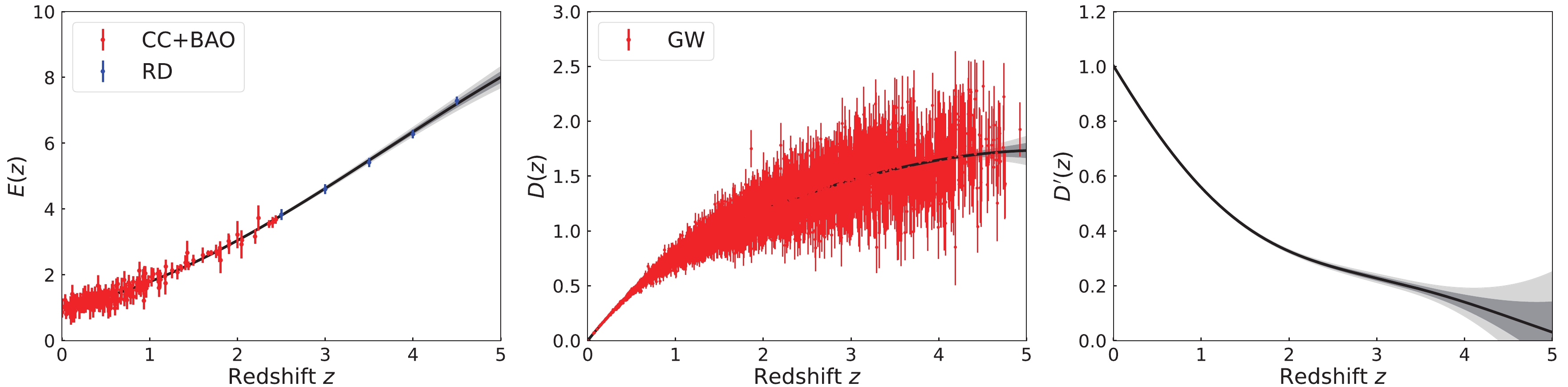

$ \mathcal{O}_{K}(z) $ leaves a large window open for a possible non-flat universe. Therefore, it is necessary to forge new cosmological probes to precisely measure the Hubble parameter and luminosity distance, tightening the constraints on the cosmic curvature. In the next decades, the GW standard siren and RD method will be greatly developed. We can adopt them to measure$ D_L(z) $ and$ H(z) $ . Then, it is worth further studying the issue of combining the GW and RD observations with the traditional CC and BAO measurements to test the spatial flatness of the universe. We normalize the simulated CC+ BAO+RD$ H(z) $ data as the$ E(z) $ data and normalize the GW$ D_L(z) $ data as the$ D(z) $ data. Note that the error of RD$ H(z) $ is relatively small and decreases with redshift, which is very helpful for us to reconstruct the cosmological function. Having obtained the datasets of$ E(z) $ and$ D(z) $ , we adopt GP to reconstruct$ E(z) $ ,$ D(z) $ , and$ D'(z) $ , and the results are shown in Fig. 3. As can be seen, the error of$ E(z) $ does not increase significantly, even up to the redshift of$ 4.5 $ . In addition, the error of$ D'(z) $ reconstructed from the GW data is significantly smaller than that from the SNe Ia data. Here, we wish to note that although the RD method is promising in measuring the Hubble parameter, the actual measurement will be fairly challenging even with powerful facilities such as the E-ELT.

Figure 3. (color online) Gaussian process reconstruction of

$ E(z) $ (left panel) from the simulated CC+BAO+RD$ H(z) $ data,$ D(z) $ (middle panel), and$ D'(z) $ (right panel) from the simulated GW$ D_L(z) $ data. The grey shaded regions are the$ 1\sigma $ and$ 2\sigma $ C.L. of the reconstruction. The dots with error bars are the simulated data.With the reconstructions of

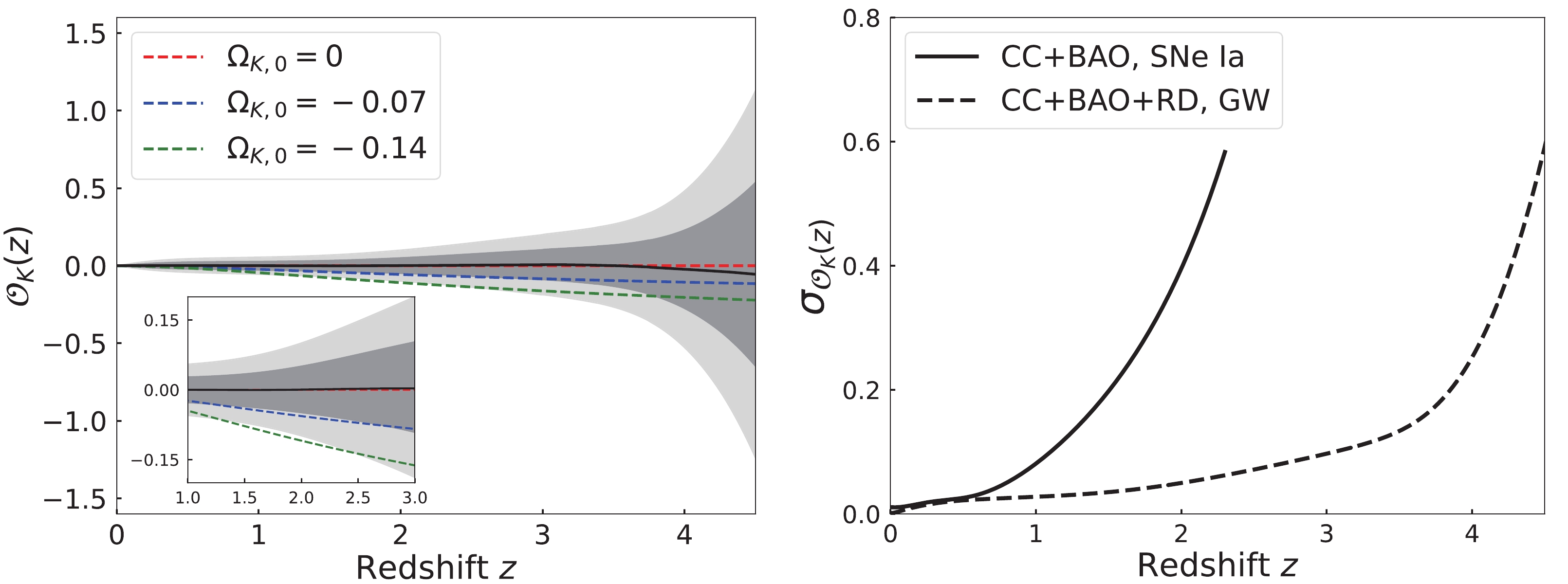

$ E(z) $ and$ D'(z) $ , we use the error propagation formula to determine$ \mathcal{O}_{K}(z) $ , and the result is shown in the left panel of Fig. 4. We see that the mean of$ \mathcal{O}_{K}(z) $ is very close to zero in the redshift interval$ 0<z<4.5 $ . Some weak deviations are mainly due to the consideration of Gaussian randomness in the simulations. The result strongly favors a flat universe, which is consistent with the assumed flat ΛCDM model. Note that we only consider$ \mathcal{O}_{K}(z) $ at$ 0<z<4.5 $ . Even though we have reconstructed the function of$ E(z) $ at$ 0<z<5 $ , the part of$ z>4.5 $ is extrapolated, whose accuracy cannot be guaranteed. In contrast, the GW$ D(z) $ data at$ z>4.5 $ are scarce and of poor quality; thus, the reconstruction is not convincing. We also plot the ΛCDM model with negative curvature in the left panel of Fig. 4. As shown, the reconstruction at$ 1.5<z<2.5 $ can rule out the universe with$ \Omega_{K,0}=-0.07 $ at the$ 1\sigma $ C.L. and the universe with$ \Omega_{K,0}=-0.14 $ at the$ 2\sigma $ C.L. Similarly, we can rule out the universe with positive curvature in this way. We confirm that if the number of CC+BAO$ H(z) $ data reaches 300, the reconstruction at$ 1.5<z<2.5 $ can rule out the universe with$ \Omega_{K,0}=-0.05 $ at the$ 1\sigma $ C.L. and the universe with$ \Omega_{K,0}=-0.09 $ at the$ 2\sigma $ C.L. We compare the$ 1\sigma $ errors of$ \mathcal{O}_{K}(z) $ derived from the different data in the right panel of Fig. 4. It can be seen that the error of$ \mathcal{O}_{K}(z) $ derived from the future {CC+BAO+RD, GW} data is significantly smaller than that derived from the current {CC+BAO, SNe Ia} data. Concretely, for example, the error provided by {CC+BAO+RD, GW} is smaller than that given by {CC+BAO, SNe Ia} at$ z=1 $ by 66.6% (and at$ z=2 $ by$ 87.3\% $ ). 
Moreover, the error of $ \mathcal{O}_{K}(z) $ derived from {CC+BAO, SNe Ia} increases rapidly at $ z>1.5 $, whereas that from {CC+BAO+RD, GW} does not increase rapidly until $ z\sim4 $. These analyses indicate that, with the synergy of multiple high-quality observations in the future, we can better determine the spatial geometry of the universe.
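To make the comparison with the curved models and the error budget concrete, the sketch below assumes $ \mathcal{O}_{K}(z)\equiv E^2(z)D'^2(z)-1 $, one form that depends only on $ E(z) $ and $ D'(z) $ and vanishes for a flat universe; this definition is an assumption of the sketch. With $ D(z) $ the dimensionless transverse comoving distance, $ E\,D'=\cosh(\sqrt{\Omega_{K,0}}\,\chi) $ (or $ \cos(\sqrt{-\Omega_{K,0}}\,\chi) $ for $ \Omega_{K,0}<0 $), where $ \chi(z)=\int_0^z dz'/E(z') $, so $ E^2D'^2-1=\Omega_{K,0}D^2(z) $: the model curves depend on redshift and are negative for a closed universe. The first-order propagation treats $ E(z) $ and $ D'(z) $ as uncorrelated, and `percent_reduction` reads "smaller by 66.6%" as $ 1-\sigma_{\rm new}/\sigma_{\rm old} $. Function names and the fiducial `omega_m=0.3` are illustrative.

```python
import numpy as np


def lcdm_curved(z, omega_m=0.3, omega_k=0.0, n_grid=4001):
    """E(z), D(z) and D'(z) for LambdaCDM with curvature (radiation neglected).

    D(z) is the dimensionless transverse comoving distance H0 d_M / c.
    """
    z = np.asarray(z, dtype=float)
    omega_l = 1.0 - omega_m - omega_k

    def hubble_e(x):
        return np.sqrt(omega_m * (1 + x) ** 3 + omega_k * (1 + x) ** 2 + omega_l)

    # chi(z) = int_0^z dz'/E(z') via a cumulative trapezoid rule on a fine grid.
    zz = np.linspace(0.0, z.max(), n_grid)
    inv_e = 1.0 / hubble_e(zz)
    chi_grid = np.concatenate(([0.0], np.cumsum(0.5 * (inv_e[1:] + inv_e[:-1]) * np.diff(zz))))
    chi = np.interp(z, zz, chi_grid)

    e = hubble_e(z)
    if omega_k > 0:  # open
        s = np.sqrt(omega_k)
        return e, np.sinh(s * chi) / s, np.cosh(s * chi) / e
    if omega_k < 0:  # closed
        s = np.sqrt(-omega_k)
        return e, np.sin(s * chi) / s, np.cos(s * chi) / e
    return e, chi, 1.0 / e  # flat


def o_k_with_error(e, sigma_e, dprime, sigma_dprime):
    """Assumed diagnostic O_K = E^2 D'^2 - 1 and its first-order 1-sigma error.

    E(z) and D'(z) are reconstructed from independent datasets, so they are
    treated as uncorrelated here.
    """
    o = e**2 * dprime**2 - 1.0
    do_de = 2.0 * e * dprime**2   # dO_K/dE
    do_ddp = 2.0 * e**2 * dprime  # dO_K/dD'
    sigma_o = np.sqrt((do_de * sigma_e) ** 2 + (do_ddp * sigma_dprime) ** 2)
    return o, sigma_o


def percent_reduction(sigma_old, sigma_new):
    """How much smaller sigma_new is than sigma_old, in percent."""
    return 100.0 * (1.0 - sigma_new / sigma_old)


if __name__ == "__main__":
    z = np.array([1.0, 2.0])
    for omk in (0.0, -0.07, -0.14):
        e, d, dp = lcdm_curved(z, omega_k=omk)
        o, _ = o_k_with_error(e, 0.0, dp, 0.0)
        print(omk, o, omk * d**2)  # the last two columns agree
```

The model curves are what the reconstruction is compared against; a reconstruction excludes a given $ \Omega_{K,0} $ at the redshifts where its curve lies outside the reconstructed band.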

Figure 4. (color online) Left panel: reconstruction of $ \mathcal{O}_{K}(z) $ from the simulated GW and CC+BAO+RD data. The grey shaded regions are the $ 1\sigma $ and $ 2\sigma $ C.L. of the reconstruction. The red, blue, and green dashed lines correspond to universes with $ \Omega_{K,0}=0 $, $ \Omega_{K,0}=-0.07 $, and $ \Omega_{K,0}=-0.14 $, respectively. Right panel: $ 1\sigma $ error of the reconstructed $ \mathcal{O}_{K}(z) $ from the observational {CC+BAO, SNe Ia} data and from the simulated {CC+BAO+RD, GW} data.

In this work, we simulated only five RD measurements at high redshifts, obtained by observing the Ly-α absorption lines of QSOs. The SKA Phase I can also measure the RD at $ 0<z<0.3 $ by observing the HI emission lines of galaxies [146–148]. However, because CC+BAO already performs well in reconstructing $ E(z) $ at low redshift, we did not consider this case. In addition, we did not consider future supernova observations, because the GW data can reconstruct $ D(z) $ and $ D'(z) $ very well, as shown in Fig. 3. We note that the GW standard siren method also has the potential to measure the Hubble parameter [120]. In principle, the luminosity distances to GW sources should be isotropic across the sky. However, mainly owing to the local motion of the observer, there are tiny anisotropies in the luminosity distance, which enable us to measure $ H(z) $. In this work, we considered a conservative scenario, that is, $ 5000 $ GW events with determined redshifts. In very optimistic scenarios, DECIGO is expected to detect $ 10^5\sim10^6 $ GW events with determined redshifts. If that can be achieved, we can reconstruct $ D(z) $ and $ D'(z) $ with very high precision and measure the Hubble parameter at $ 0<z\lesssim3 $ with an accuracy of a few percent [120]. We could then test the spatial flatness of the universe in a cosmological model-independent way using only the GW data. We plan to explore this possibility in future work.
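For reference, the redshift drift (Sandage-Loeb) relation behind the RD measurements links the observed drift directly to $ H(z) $: $ \Delta z/\Delta t_0=(1+z)H_0-H(z) $, or in velocity units $ \Delta v=cH_0\Delta t_0[1-E(z)/(1+z)] $. The sketch below evaluates $ \Delta v $ for an illustrative flat ΛCDM fiducial ($ H_0=70 $ km/s/Mpc, $ \Omega_m=0.3 $, a 20-year baseline; all assumptions) and inverts it back to $ H(z) $ given $ H_0 $. It does not model the E-ELT Ly-α or SKA HI error budgets, and the function names are hypothetical.

```python
import numpy as np

C_CM_S = 2.99792458e10              # speed of light [cm/s]
KM_PER_MPC = 3.0856775814913673e19  # kilometres per megaparsec
SECONDS_PER_YEAR = 3.15576e7        # Julian year [s]


def velocity_drift(z, h0=70.0, omega_m=0.3, years=20.0):
    """Sandage-Loeb velocity drift Delta v = c H0 Delta t0 [1 - E(z)/(1+z)] in cm/s.

    A flat LambdaCDM E(z) is used purely as an illustrative fiducial model.
    """
    z = np.asarray(z, dtype=float)
    e = np.sqrt(omega_m * (1 + z) ** 3 + 1.0 - omega_m)
    h0_per_s = h0 / KM_PER_MPC          # H0 converted to 1/s
    dt = years * SECONDS_PER_YEAR
    return C_CM_S * h0_per_s * dt * (1.0 - e / (1.0 + z))


def hubble_from_drift(z, dv, h0=70.0, years=20.0):
    """Invert a velocity drift (cm/s) to H(z) in km/s/Mpc, given H0 and the baseline."""
    z = np.asarray(z, dtype=float)
    h0_per_s = h0 / KM_PER_MPC
    dt = years * SECONDS_PER_YEAR
    dz = (1.0 + z) * dv / C_CM_S        # Delta v = c Delta z / (1 + z)
    h_per_s = (1.0 + z) * h0_per_s - dz / dt  # Delta z / Delta t0 = (1+z) H0 - H(z)
    return h_per_s * KM_PER_MPC
```

The drift is positive at low redshift and turns negative once $ E(z)>1+z $; for these fiducial values it amounts to only a few cm/s over two decades, which is why the measurement is so demanding.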

In this paper, we adopt a cosmological model-independent method to test whether the cosmic curvature $ \Omega_{K,0} $ deviates from zero. We use the Gaussian process method to reconstruct the reduced Hubble parameter $ E(z) $ and the distance-redshift relation $ [D(z),D'(z)] $ independently, without assuming any specific cosmological model. By combining the reconstructions of $ E(z) $ and $ D'(z) $, we determine $ \mathcal{O}_{K}(z) $, which is zero at any redshift for a spatially flat universe with $ \Omega_{K,0}=0 $; this allows us to carry out the null test of $ \Omega_{K,0} $. We use the latest CC Hubble data, radial BAO Hubble data, and Pantheon SNe Ia data to implement our analysis.

Our result is consistent with a flat universe ($ \Omega_{K,0}=0 $) at the $ 1\sigma $ confidence level within the reconstruction domain $ 0<z<2.3 $. We stress that the reconstruction favors a flat universe at $ 0<z<1 $ but tends to favor a closed universe at $ z>1 $; in this sense, a closed universe remains possible. The error of the reconstructed function increases rapidly at $ z>1.5 $ because of the poor quality of the observational data in that region, so new cosmological probes are needed to precisely measure the luminosity distance and Hubble parameter. The GW standard siren and redshift drift observations, which can be used to measure $ D_L(z) $ and $ H(z) $, will be greatly developed in the next decades. We simulated GW standard siren and RD data based on hypothetical observations by the upcoming DECIGO and E-ELT, respectively. Because the traditional methods for measuring the Hubble parameter are also promising, we also simulated CC+BAO $ H(z) $ data for the next decades. Combining these mock data, we performed a flatness test of the universe. We find that with the synergy of multiple high-quality future observations, we can tightly constrain the spatial geometry of the universe or exclude a flat universe with $ \Omega_{K,0}=0 $.

We thank Purba Mukherjee, Bo-Yang Zhang, Bo Wang, Tian-Nuo Li, Shang-Jie Jin, Ling-Feng Wang, and Ze-Wei Zhao for fruitful discussions. We sincerely thank Purba Mukherjee for providing us with the BAO data.

Null test for cosmic curvature using Gaussian process

- Received Date: 2023-01-25

- Available Online: 2023-05-15

Abstract: The cosmic curvature
